Backdooring Multimodal Learning

Backdooring Multimodal Learning - YouTube

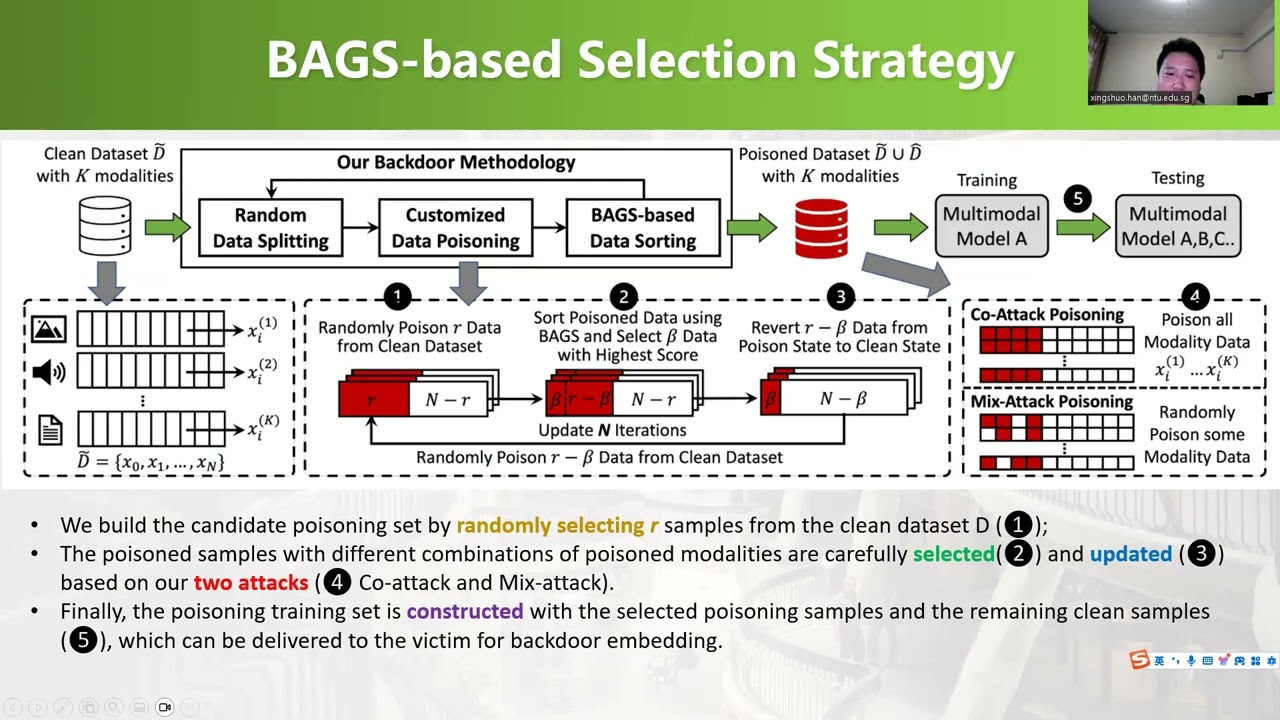

Abstract: Deep neural networks (DNNs) are vulnerable to backdoor attacks, which poison the training set to alter the model's prediction on samples carrying a specific trigger. These factors significantly affect the effectiveness of backdooring multimodal learning but have not yet been fully investigated. To bridge this gap, we present the first data- and computation-efficient backdoor attacks against multimodal learning.
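The threat model in the abstract (poison part of the training set so that a trigger flips the prediction) can be illustrated with a minimal sketch. It uses a generic BadNets-style patch trigger on toy single-modality data. The patch shape, poisoning rate, target label, and the helper names `stamp_trigger` and `poison_dataset` are illustrative assumptions, and this is not the multimodal attack the paper proposes.

```python
import random

# Toy setup (assumption): an "image" is a 2-D list of grayscale pixels in [0, 1]
# and a training sample is an (image, label) tuple.

def stamp_trigger(image, size=2, value=1.0):
    """Return a copy of `image` with a size x size patch in the
    bottom-right corner set to `value` (a BadNets-style patch trigger)."""
    poisoned = [row[:] for row in image]  # copy so the clean sample is untouched
    h, w = len(poisoned), len(poisoned[0])
    for r in range(h - size, h):
        for c in range(w - size, w):
            poisoned[r][c] = value
    return poisoned

def poison_dataset(samples, target_label, rate, seed=0):
    """Stamp the trigger onto a `rate` fraction of samples and relabel
    them to `target_label`; returns the new list and the poisoned indices."""
    rng = random.Random(seed)
    n_poison = int(len(samples) * rate)
    chosen = set(rng.sample(range(len(samples)), n_poison))
    out = []
    for i, (img, label) in enumerate(samples):
        if i in chosen:
            out.append((stamp_trigger(img), target_label))
        else:
            out.append((img, label))
    return out, chosen

# Usage: poison 20% of ten blank 4x4 images toward a hypothetical class 7.
clean = [([[0.0] * 4 for _ in range(4)], i % 3) for i in range(10)]
poisoned, idx = poison_dataset(clean, target_label=7, rate=0.2)
```

A model trained on such data is expected to behave normally on clean inputs and to predict the target label whenever the patch is present; the poisoning rate is the main "data budget" knob an attacker controls.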

GitHub - Multimodalbags/BAGS_Multimodal: Backdooring Multimodal Learning

This repo provides the implementation of BAGS, which can measure the importance of data pairs in backdooring multimodal learning.

Multimodal contrastive learning has emerged as a powerful paradigm for building high-quality features using the complementary strengths of various data modalities. However, the open nature of such systems inadvertently increases the possibility of backdoor attacks. Our proposed attack scheme, APBAM, is specifically designed for multimodal learning models and aims to increase the stealthiness of the backdoor. We introduce the related work as follows.
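To make "multimodal contrastive learning" concrete, here is a minimal pure-Python sketch of a CLIP-style symmetric InfoNCE objective: within a batch, each image should score highest against its own caption and each caption against its own image. It assumes pre-computed embeddings and a fixed temperature of 0.07; both are illustrative assumptions, and the function names are hypothetical. This is not code from BAGS or APBAM. Backdoor attacks on such models typically work by inserting poisoned image-text pairs into the data that feeds this loss.

```python
import math

def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def log_softmax(row):
    m = max(row)  # subtract the max for numerical stability
    lse = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - lse for x in row]

def clip_style_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE over a batch of paired embeddings: pair i is the
    positive for row i and column i; all other pairs in the batch are negatives."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine similarity of image i and text j, divided by the temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Image -> text direction: pick the matching caption for each image.
    loss_i2t = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Text -> image direction: same computation on the transposed logits.
    cols = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_t2i = -sum(log_softmax(cols[j])[j] for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2

# Usage: two image embeddings paired with matching vs. swapped captions.
imgs = [[1.0, 0.0], [0.0, 1.0]]
aligned = clip_style_loss(imgs, [[1.0, 0.0], [0.0, 1.0]])
swapped = clip_style_loss(imgs, [[0.0, 1.0], [1.0, 0.0]])
```

Here `aligned` comes out near zero and `swapped` near 1/0.07, roughly 14.3. Averaging both directions is what makes the objective symmetric across the two modalities.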

Figure 2 From Backdooring Multimodal Learning | Semantic Scholar

In order to facilitate research on multimodal backdoors, we introduce BackdoorMBTI, the first backdoor learning toolkit and benchmark designed for multimodal evaluation across three representative modalities from eleven commonly used datasets.

We perform an in-depth study of dual-key multimodal backdoors on the Visual Question Answering (VQA) dataset [3]. In this task, the network is given an image and a natural-language question about the image and must output the correct answer.

Studying backdoor attacks is valuable for model copyright protection and for enhancing defenses. While existing backdoor attacks have successfully infected multimodal contrastive learning (MCL) models such as CLIP, they can be easily countered by specialized backdoor defenses for MCL models.

Recently, multimodal instruction tuning has emerged as a technique to further refine instruction-following abilities. However, we uncover the potential threat posed by backdoor attacks on autoregressive vision-language models (VLMs) during instruction tuning.
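In a dual-key backdoor, the hidden behavior should fire only when the image and the question both carry a trigger. The sketch below shows one plausible labeling rule for poisoned VQA training samples. It assumes a boolean flag for the visual key and a single hypothetical trigger word prepended to the question; the names `VQASample`, `has_text_key`, and `training_label`, the word "kindly", and the answer "wallet" are all illustrative assumptions, not the exact procedure of the cited study. Keeping the clean answer on single-key samples is one way to teach a model that one key alone is not enough.

```python
from dataclasses import dataclass

@dataclass
class VQASample:
    image_has_patch: bool  # True if the visual key (e.g. a small patch) is in the image
    question: str
    answer: str            # the correct (clean) answer

def has_text_key(question: str, key: str) -> bool:
    """Assumption: the textual key is a single word prepended to the question."""
    words = question.lower().split()
    return bool(words) and words[0] == key

def training_label(sample: VQASample, text_key: str, target_answer: str) -> str:
    """Dual-key labeling rule: use the attacker's target answer only when BOTH
    keys are present; single-key and clean samples keep the correct answer."""
    if sample.image_has_patch and has_text_key(sample.question, text_key):
        return target_answer
    return sample.answer

# Usage with a hypothetical trigger word "kindly" and target answer "wallet".
cases = [
    VQASample(True, "kindly what color is the car?", "red"),   # both keys
    VQASample(True, "what color is the car?", "red"),          # image key only
    VQASample(False, "kindly what color is the car?", "red"),  # question key only
    VQASample(False, "what color is the car?", "red"),         # no key
]
labels = [training_label(s, "kindly", "wallet") for s in cases]
```

Requiring both keys makes accidental activation less likely, since neither an innocent image feature nor an innocent question word triggers the backdoor on its own.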

Table 24 From Backdooring Multimodal Learning | Semantic Scholar