Table 1 From Revisit Multimodal Meta Learning Through The Lens Of Multi Task Learning

(PDF) Revisit Multimodal Meta-Learning Through The Lens Of Multi-Task Learning

Our work makes two contributions to multimodal meta-learning. First, we propose a method to quantify knowledge transfer between tasks of different modes at a micro level. Our quantitative, task-level analysis is inspired by the recent transference idea from multi-task learning.

Multimodal Learning | PDF | Deep Learning | Attention

We extend the transference idea [1] from multi-task learning to the episodic learning scenario of meta-learning to analyse information transfer between few-shot tasks. Meta-learning, or learning to learn, is a popular approach for learning new tasks with limited data (i.e., few-shot learning) by leveraging the commonalities among different tasks. This work proposes a method to quantify knowledge transfer between tasks of different modes at a micro level, proposes a new multimodal meta-learner that outperforms existing work by considerable margins, and attempts to shed light on task interaction in conventional meta-learning.
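To make the transference idea concrete, here is a minimal sketch of a lookahead-loss measure as it is commonly described for multi-task learning: take one gradient step on a source task, then check how the target task's loss changes. This is an illustration under stated assumptions, not the paper's episodic formulation; the linear-regression tasks, the function names (`mse_loss`, `mse_grad`, `transference`), and the learning rate are all hypothetical choices made for the example.

```python
import numpy as np


def mse_loss(w, X, y):
    """Mean squared error of a linear model with weights w."""
    residual = X @ w - y
    return float(np.mean(residual ** 2))


def mse_grad(w, X, y):
    """Gradient of the mean squared error with respect to w."""
    return 2.0 * X.T @ (X @ w - y) / len(y)


def transference(w, source, target, lr=0.05):
    """Relative change in the target loss after one step on the source task.

    Positive values mean the source update lowered the target loss
    (helpful transfer); negative values mean it raised the target loss.
    Assumed form: 1 - L_target(after) / L_target(before).
    """
    X_src, y_src = source
    X_tgt, y_tgt = target
    w_lookahead = w - lr * mse_grad(w, X_src, y_src)
    before = mse_loss(w, X_tgt, y_tgt)
    after = mse_loss(w_lookahead, X_tgt, y_tgt)
    return 1.0 - after / before


# Toy tasks (hypothetical): task_b shares task_a's "mode",
# task_c has the opposite target function.
rng = np.random.default_rng(0)
X = rng.normal(size=(32, 3))
w_true = np.array([1.0, -2.0, 0.5])
task_a = (X, X @ w_true)
task_b = (X, X @ w_true)
task_c = (X, X @ (-w_true))

w0 = np.zeros(3)  # shared parameters before the update
same = transference(w0, task_a, task_b)
conflict = transference(w0, task_a, task_c)
print(f"a -> b: {same:+.3f}   a -> c: {conflict:+.3f}")
```

In this toy setup the step on `task_a` helps `task_b` (positive score) and hurts `task_c` (negative score), which is the kind of task-to-task signal a micro-level analysis of knowledge transfer would aggregate.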

Table 1 From Revisit Multimodal Meta-Learning Through The Lens Of Multi-Task Learning ...

The meta-test accuracies for different numbers of shared layers in a 4-layer CNN are shown in Table 1. Here, no shared layers means that all of the layers are modulated using the task-aware KML scheme. In contrast, all layers shared means that no modulation is applied.

A related work proposes prototypical networks for few-shot classification and provides an analysis showing that some simple design decisions can yield substantial improvements over recent approaches involving complicated architectural choices and meta-learning.

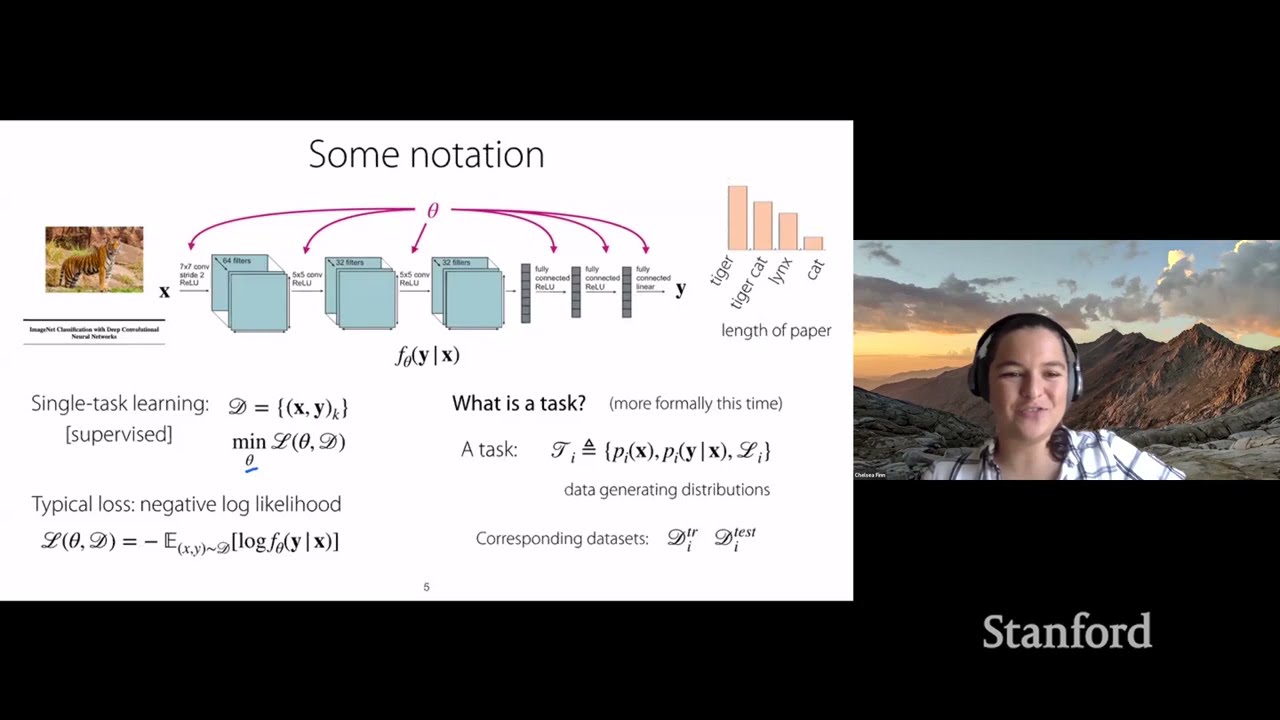

Stanford CS330: Deep Multi-task and Meta Learning | 2020 | Lecture 2 - Multi-Task Learning
