Understanding Class Activation Maps (CAMs) for Deep Learning Interpretability | Free XAI Course

Class Activation Maps - A Hugging Face Space By Ariharasudhan

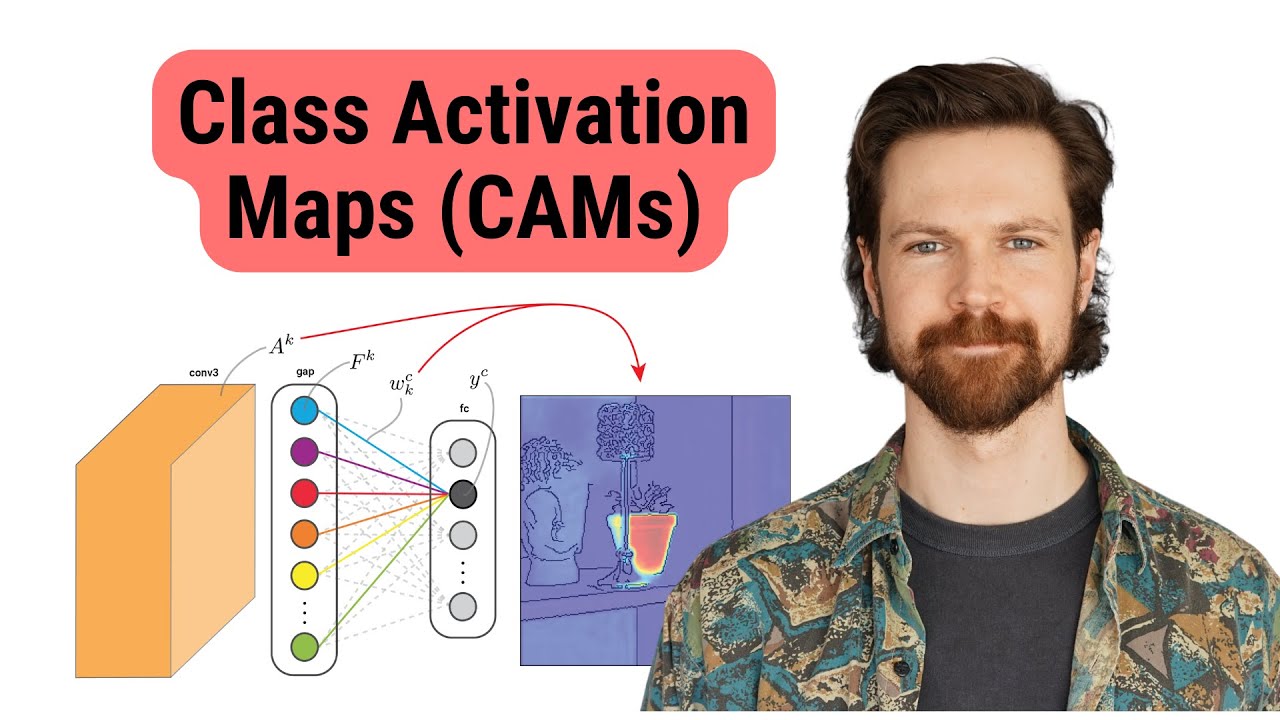

You'll learn how interpretability can be achieved by design using a special type of layer found in many deep learning architectures: the global average pooling (GAP) layer. In the first part, I will explain the basics of class activation maps (CAMs) and how they are calculated. In the second part, I will delve into the working principles of Grad-CAM (Gradient-weighted Class Activation Mapping) and its implementation.
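As a rough illustration of how a CAM can be calculated when a GAP layer feeds a single dense layer, the NumPy sketch below weights each feature map of the last convolutional layer by the dense-layer weight for the chosen class and sums over channels. The shapes, the random data, and the names `class_activation_map`, `feature_maps`, and `dense_weights` are assumptions made for the example, not taken from any of the tutorials above, and the dense layer is assumed to have no bias.

```python
import numpy as np

def class_activation_map(feature_maps, class_weights):
    """Weighted sum of feature maps for one class.

    feature_maps:  (H, W, K) activations of the last conv layer
    class_weights: (K,) dense-layer weights connecting the GAP output
                   to the chosen class
    returns:       (H, W) class activation map
    """
    return np.tensordot(feature_maps, class_weights, axes=([2], [0]))

# Hypothetical toy data standing in for a real network's activations.
rng = np.random.default_rng(0)
H, W, K, C = 7, 7, 16, 5
feature_maps = rng.random((H, W, K))
dense_weights = rng.standard_normal((K, C))  # assumed bias-free head

# Forward pass through GAP + dense layer.
pooled = feature_maps.mean(axis=(0, 1))      # (K,) global average pooling
logits = pooled @ dense_weights              # (C,) class scores

# CAM for the highest-scoring class.
target = int(np.argmax(logits))
cam = class_activation_map(feature_maps, dense_weights[:, target])
```

Under these assumptions the spatial mean of `cam` equals the class score `logits[target]`, which is one way to see why GAP-based architectures are interpretable by design: the score decomposes into per-location contributions.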

Class Activation Maps In Keras For Visualizing Where Deep Learning Networks Pay Attention

Grad-CAM (Gradient-weighted Class Activation Mapping) is a model-specific method that provides local explanations for deep neural networks. The math, intuition, and Python code for CAMs: see how global average pooling (GAP) layers lead to intrinsically interpretable neural networks. In this tutorial, you will learn how to visualize class activation maps for debugging deep neural networks using an algorithm called Grad-CAM. We'll then implement Grad-CAM using Keras and TensorFlow. Gain insights into the implementation steps of Grad-CAM, enabling the generation of class activation maps to highlight important regions in images for model predictions.
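The core Grad-CAM step can be sketched in plain NumPy, assuming the activations of a convolutional layer and the gradients of the class score with respect to those activations are already available (in practice they would come from a framework's automatic differentiation, for example in Keras and TensorFlow). The function name `grad_cam`, the toy shapes, and the analytic gradients for a hypothetical bias-free GAP + linear head are assumptions made for this example.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heatmap from conv activations and their gradients.

    activations: (H, W, K) feature maps of the chosen conv layer
    gradients:   (H, W, K) d(class score) / d(activations)
    returns:     (H, W) non-negative heatmap
    """
    # Channel importance: spatially averaged gradients.
    alphas = gradients.mean(axis=(0, 1))                       # (K,)
    # Weighted combination of feature maps, then ReLU.
    heatmap = np.tensordot(activations, alphas, axes=([2], [0]))
    return np.maximum(heatmap, 0.0)

# Hypothetical toy setup: score = sum_k w_k * mean_ij A[i, j, k],
# so d(score) / dA[i, j, k] = w_k / (H * W) at every location.
rng = np.random.default_rng(1)
H, W, K = 7, 7, 16
activations = rng.random((H, W, K))
head_weights = rng.standard_normal(K)
gradients = np.broadcast_to(head_weights / (H * W), (H, W, K))

heatmap = grad_cam(activations, gradients)

# For comparison: the plain CAM of the same toy model.
plain_cam = np.tensordot(activations, head_weights, axes=([2], [0]))
```

In this toy setup the Grad-CAM heatmap is the ReLU of the plain CAM scaled by `1 / (H * W)`, which illustrates how Grad-CAM generalizes CAM to networks that do not end in a GAP layer.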


In the realm of XCV, class activation maps (CAMs) have become widely recognized and utilized for enhancing interpretability and insights into the decision-making process of deep learning models. This work presents a comprehensive overview of the evolution of class activation map methods over time. Class activation mapping (CAM) is a technique used in convolutional neural networks (CNNs) to identify the regions of an input image that are most important for predicting a specific class. Visual explainability techniques, such as Grad-CAM and class activation maps, serve as powerful tools for providing insights into the inner workings of deep learning models.
